What are eigenvalues and eigenvectors, and why are they important?

Eigenvalues and eigenvectors describe how a linear transformation acts along certain special directions. A linear transformation is a function T that maps vectors to vectors while preserving vector addition and scalar multiplication: T(u + v) = T(u) + T(v) and T(cv) = cT(v). In finite dimensions, every such transformation can be written as multiplication by a matrix A. An eigenvector of A is a nonzero vector v that the transformation maps to a scalar multiple of itself, so that Av = λv for some scalar λ. That scalar λ is the eigenvalue corresponding to v.
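The defining relation Av = λv can be checked directly. The following is a minimal sketch in plain Python; the matrix `A`, the vectors `v` and `w`, and the helper `matvec` are illustrative choices, not part of any library.

```python
def matvec(A, v):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

# Hypothetical example matrix, chosen so the arithmetic stays simple.
A = [[2, 0],
     [0, 3]]

v = [1, 0]  # candidate eigenvector
w = [1, 1]  # a vector that is not an eigenvector of A

Av = matvec(A, v)  # [2, 0], which is 2 * v, so v is an eigenvector with eigenvalue 2
Aw = matvec(A, w)  # [2, 3], which is not a scalar multiple of w

print(Av, Aw)
```

The vector `w` changes direction under `A`, which is why it does not qualify as an eigenvector.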

Eigenvalues and eigenvectors are important because they reveal the structure of a linear transformation. For example, a square matrix is invertible if and only if zero is not one of its eigenvalues. For a symmetric matrix, the eigenvectors can be chosen to be mutually orthogonal, and they give the principal axes of the transformation.

Eigenvalues and eigenvectors are used in a variety of applications, including:

- Linear algebra

- Quantum mechanics

- Control theory

- Signal processing

- Image processing

Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are mathematical concepts that are used to describe the behavior of linear transformations. They are important because they can be used to understand the behavior of a linear transformation, such as whether it is invertible or not, and to find the principal axes of the transformation.

- Eigenvalues: Scaling factors that correspond to eigenvectors.

- Eigenvectors: Nonzero vectors that a linear transformation maps to scalar multiples of themselves.

- Linear transformation: A function that maps vectors to vectors and preserves vector addition and scalar multiplication.

- Invertible matrix: A square matrix that has an inverse matrix.

- Principal axes: For a symmetric matrix, the mutually orthogonal directions given by its eigenvectors.

- Applications: Eigenvalues and eigenvectors are used in a variety of applications, including linear algebra, quantum mechanics, control theory, signal processing, and image processing.

For example, in quantum mechanics, the allowed energy levels of an electron in an atom are the eigenvalues of the Hamiltonian operator. In control theory, the eigenvalues of the system matrix determine whether a control system is stable. In signal processing, eigenvalues are used to analyze the frequency components of a signal. And in image processing, eigenvalues are used to denoise images.

1. Eigenvalues

Eigenvalues and eigenvectors are two central concepts in linear algebra. An eigenvector of a matrix A is a nonzero vector v that satisfies Av = λv, and the scalar λ is the corresponding eigenvalue.

Eigenvalues and eigenvectors can be used to understand the behavior of a linear transformation. For example, a square matrix is invertible if and only if zero is not one of its eigenvalues. For a symmetric matrix, the eigenvectors give the principal axes of the transformation.

- Eigenvalues and diagonalizability: An n × n matrix is diagonalizable if and only if it has n linearly independent eigenvectors. In this case, the eigenvalues are the diagonal entries of the diagonalized matrix.

- Eigenvalues and invertibility: A matrix is invertible if and only if all of its eigenvalues are nonzero. If an eigenvalue is zero, then the corresponding eigenvector is in the null space of the matrix, and the matrix is not invertible.

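- Eigenvalues and stability: The eigenvalues of a matrix A determine the stability of the linear system it defines. The continuous-time system x' = Ax is asymptotically stable if and only if every eigenvalue has a negative real part, and it is unstable if any eigenvalue has a positive real part. For the discrete-time system x[k+1] = Ax[k], the condition is instead that every eigenvalue has magnitude less than 1.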

Eigenvalues and eigenvectors are powerful tools for understanding the behavior of linear transformations. They have a wide range of applications in science and engineering.
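The invertibility and stability criteria can be checked numerically. The following is a minimal sketch, assuming a 2 × 2 matrix and the continuous-time system x' = Ax, where stability means that every eigenvalue has a negative real part. The helper names and the example matrices are illustrative; for larger matrices a numerical routine such as NumPy's `numpy.linalg.eigvals` would normally be used instead of the closed-form formula.

```python
import cmath

def eigenvalues_2x2(A):
    """Eigenvalues of a 2x2 matrix, computed from its trace and determinant."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    # Roots of the characteristic polynomial lambda^2 - trace*lambda + det.
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2

def is_invertible(A, tol=1e-12):
    """A matrix is invertible exactly when no eigenvalue is zero."""
    return all(abs(lam) > tol for lam in eigenvalues_2x2(A))

def is_stable_continuous(A):
    """x' = Ax is asymptotically stable when every eigenvalue has negative real part."""
    return all(lam.real < 0 for lam in eigenvalues_2x2(A))

singular = [[1, 2], [2, 4]]   # eigenvalues 5 and 0, so not invertible
damped = [[0, 1], [-2, -3]]   # eigenvalues -1 and -2, so invertible and stable

print(is_invertible(singular), is_invertible(damped))
print(is_stable_continuous(singular), is_stable_continuous(damped))
```

Using `cmath.sqrt` rather than `math.sqrt` keeps the sketch valid when the eigenvalues are complex, in which case only the real part matters for stability.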

2. Eigenvectors

Eigenvectors cannot be separated from eigenvalues: each eigenvector is defined together with the eigenvalue that scales it. An eigenvector is a nonzero vector that a linear transformation maps to a scalar multiple of itself, so the transformation only rescales it (and reverses it if the eigenvalue is negative). This property makes eigenvectors useful for understanding the behavior of linear transformations.

- Eigenvectors and diagonalizability: An n × n matrix is diagonalizable if and only if it has n linearly independent eigenvectors. In this case, the eigenvectors form a basis for the vector space, and in that basis the matrix is represented by a diagonal matrix with the eigenvalues on the diagonal.

- Eigenvectors and invertibility: A matrix is invertible if and only if all of its eigenvalues are nonzero. If an eigenvalue is zero, then the corresponding eigenvector is in the null space of the matrix, and the matrix is not invertible.

- Eigenvectors and stability: The eigenvalues of a matrix A determine the stability of the linear system it defines. The continuous-time system x' = Ax is asymptotically stable if and only if every eigenvalue has a negative real part, and it is unstable if any eigenvalue has a positive real part. The eigenvectors give the directions along which the corresponding solutions decay or grow.

Eigenvectors are powerful tools for understanding the behavior of linear transformations. They have a wide range of applications in science and engineering.
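A short worked example may help show why the requirement of n linearly independent eigenvectors is a real restriction. The shear matrix below is chosen purely for illustration:

$$A = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}, \qquad \det(A - \lambda I) = (1 - \lambda)^2$$

The only eigenvalue is $\lambda = 1$. Solving $(A - I)x = 0$ gives $x_2 = 0$, so every eigenvector is a nonzero multiple of $(1, 0)^T$. A 2 × 2 matrix needs two linearly independent eigenvectors to be diagonalizable, and this one has only one, so it is not diagonalizable. It is still invertible, since $\det(A) = 1$ and no eigenvalue is zero.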

3. Linear transformation

A linear transformation is a function T that maps vectors to vectors and preserves vector addition and scalar multiplication, so that T(u + v) = T(u) + T(v) and T(cv) = cT(v). Linear transformations are important in a variety of applications, including computer graphics, physics, and engineering.

The connection between linear transformations and eigenvalues and eigenvectors is that eigenvalues and eigenvectors can be used to characterize linear transformations. For example, the eigenvalues of a linear transformation with matrix A are the roots of its characteristic polynomial, det(A - λI). The eigenvectors for a given eigenvalue λ are the nonzero vectors in the corresponding eigenspace, which is the null space of A - λI.
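For a general 2 × 2 matrix, the characteristic polynomial can be written out explicitly. The entries $a$, $b$, $c$, $d$ below are placeholder symbols introduced for this illustration:

$$\det(A - \lambda I) = \det\begin{pmatrix} a - \lambda & b \\ c & d - \lambda \end{pmatrix} = \lambda^2 - (a + d)\lambda + (ad - bc)$$

The coefficient $a + d$ is the trace of the matrix and the constant term $ad - bc$ is its determinant, so in the 2 × 2 case the eigenvalues are the two roots of a quadratic determined entirely by these two quantities.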

Understanding the connection between linear transformations and eigenvalues and eigenvectors is important for a variety of reasons. First, it provides a way to understand the behavior of linear transformations. Second, it provides a way to decouple and solve systems of linear differential equations. Third, for symmetric matrices, it provides a way to find the principal axes of a linear transformation.

4. Invertible matrix

An invertible matrix is a square matrix A that has a multiplicative inverse. That is, there exists another square matrix B of the same size such that AB = BA = I, the identity matrix. Invertible matrices are important in a variety of applications, most directly in solving systems of linear equations: when A is invertible, Ax = b has exactly one solution for every b.

- Connection to eigenvalues and eigenvectors

The eigenvalues of a matrix are the roots of its characteristic polynomial, and the eigenvectors for each eigenvalue are the nonzero vectors in the corresponding eigenspace. For a matrix to be invertible, all of its eigenvalues must be nonzero. One way to see this is through the determinant, which equals the product of the eigenvalues, counted with multiplicity. The inverse of a matrix is given by the following formula: $$A^{-1} = \frac{1}{\det(A)}C^T$$ where $A$ is the original matrix, $\det(A)$ is its determinant, and $C^T$ is the transpose of its cofactor matrix. If any of the eigenvalues of $A$ are zero, then $\det(A) = 0$, the formula is undefined, and the matrix is not invertible.
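The cofactor formula can be sketched for the 2 × 2 case. The code below is an illustration under stated assumptions: the helper names `inverse_2x2` and `matmul` and the example matrix are hypothetical, and exact fractions are used only to avoid rounding in the check.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def inverse_2x2(A):
    """Inverse of a 2x2 integer matrix via A^{-1} = (1 / det(A)) * C^T."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        # A zero determinant means at least one eigenvalue is zero.
        raise ValueError("matrix is singular: det(A) = 0")
    cofactor_transpose = [[d, -b], [-c, a]]
    return [[Fraction(x, det) for x in row] for row in cofactor_transpose]

A = [[4, 1], [2, 3]]     # eigenvalues 5 and 2, so det(A) = 5 * 2 = 10
A_inv = inverse_2x2(A)
identity = matmul(A, A_inv)

print(A_inv)
print(identity)
```

Calling `inverse_2x2([[1, 2], [2, 4]])` raises `ValueError`, because that matrix has a zero eigenvalue and therefore a zero determinant.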

- Applications

Invertible matrices have a variety of applications, including:

- Solving systems of linear equations

- Finding eigenvalues and eigenvectors

- Computing matrix inverses

- Computer graphics

- Physics

- Engineering

Invertible matrices are a fundamental concept in linear algebra. They have a variety of important applications in mathematics, science, and engineering.

5. Principal axes

Principal axes are important in a variety of applications, including computer graphics, physics, and engineering. For example, in computer graphics, principal axes are used to align objects with the camera's coordinate system. In physics, principal axes are used to find the moments of inertia of an object. And in engineering, principal axes are used to design structures that are resistant to bending and torsion.

The connection between principal axes and eigenvalues and eigenvectors is that the eigenvectors of a linear transformation are the directions along which the transformation acts as pure scaling, and the eigenvalues are the scaling factors. When the transformation is represented by a symmetric matrix, such as an inertia tensor, the eigenvectors can be chosen to be mutually orthogonal. These orthogonal eigenvector directions are the principal axes of the transformation.
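For a symmetric 2 × 2 matrix, the principal axes can be computed in closed form. The following is a minimal sketch, assuming a symmetric matrix with a nonzero off-diagonal entry; the function name `principal_axes` and the example matrix `S` are illustrative only.

```python
import math

def principal_axes(S):
    """Return [(eigenvalue, unit eigenvector), ...] for a symmetric 2x2 matrix."""
    (a, b), (b2, d) = S
    if b != b2:
        raise ValueError("matrix must be symmetric")
    if b == 0:
        raise ValueError("matrix is already diagonal; its axes are the coordinate axes")
    mean = (a + d) / 2
    radius = math.hypot((a - d) / 2, b)
    axes = []
    for lam in (mean + radius, mean - radius):
        # (b, lam - a) solves (S - lam * I) x = 0 when b is nonzero.
        x, y = b, lam - a
        norm = math.hypot(x, y)
        axes.append((lam, (x / norm, y / norm)))
    return axes

S = [[2, 1], [1, 2]]   # hypothetical symmetric matrix with eigenvalues 3 and 1
axes = principal_axes(S)
(lam1, v1), (lam2, v2) = axes
dot = v1[0] * v2[0] + v1[1] * v2[1]   # close to zero: the two axes are perpendicular

print(axes, dot)
```

The two unit vectors point along the diagonals (1, 1) and (1, -1), so in this example the principal axes are the coordinate axes rotated by 45 degrees.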

Understanding the connection between principal axes and eigenvalues and eigenvectors is important because it simplifies the description of the transformation. In coordinates aligned with the principal axes, a symmetric transformation acts as an independent scaling along each axis, with the eigenvalues as the scaling factors and no coupling between the axes.

6. Applications

Eigenvalues and eigenvectors are two important concepts in mathematics that have a wide range of applications in science and engineering. In this section, we will explore the connection between eigenvalues and eigenvectors and their applications in various fields.

In linear algebra, eigenvalues and eigenvectors are used to study the behavior of linear transformations. An eigenvector is a nonzero vector that the transformation maps to a scalar multiple of itself, and the eigenvalue is the corresponding scaling factor.

Eigenvalues and eigenvectors can be used to solve systems of linear equations, find the principal axes of a transformation, and determine whether a matrix is invertible. They are also used in a variety of other applications, including:

- Quantum mechanics: Eigenvalues and eigenvectors are used to find the energy levels of an electron in an atom.

- Control theory: Eigenvalues and eigenvectors are used to design control systems that are stable.

- Signal processing: Eigenvalues and eigenvectors are used to analyze the frequency components of a signal.

- Image processing: Eigenvalues and eigenvectors are used to denoise images.

The connection between eigenvalues and eigenvectors and their applications is that eigenvalues and eigenvectors can be used to understand the behavior of linear transformations. This understanding is essential for a variety of applications in science and engineering.

For example, in quantum mechanics, eigenvalues are used to find the energy levels of an electron in an atom. This information is essential for understanding the behavior of atoms and molecules.

In control theory, eigenvalues are used to design control systems that are stable. This is essential for ensuring that systems such as airplanes and robots can operate safely and reliably.

In signal processing, eigenvalues are used to analyze the frequency components of a signal. This information is essential for a variety of applications, such as speech recognition and medical imaging.

In image processing, eigenvalues are used to denoise images. This is essential for improving the quality of images and making them more useful for a variety of applications, such as medical diagnosis and security.

Frequently Asked Questions about Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are two important concepts in linear algebra with a wide range of applications. Here are answers to some frequently asked questions about eigenvalues and eigenvectors:

Question 1: What are eigenvalues and eigenvectors?

Eigenvalues are scalar values that scale eigenvectors. Eigenvectors are non-zero vectors that do not change direction when undergoing a linear transformation, except for a scaling by the corresponding eigenvalue.

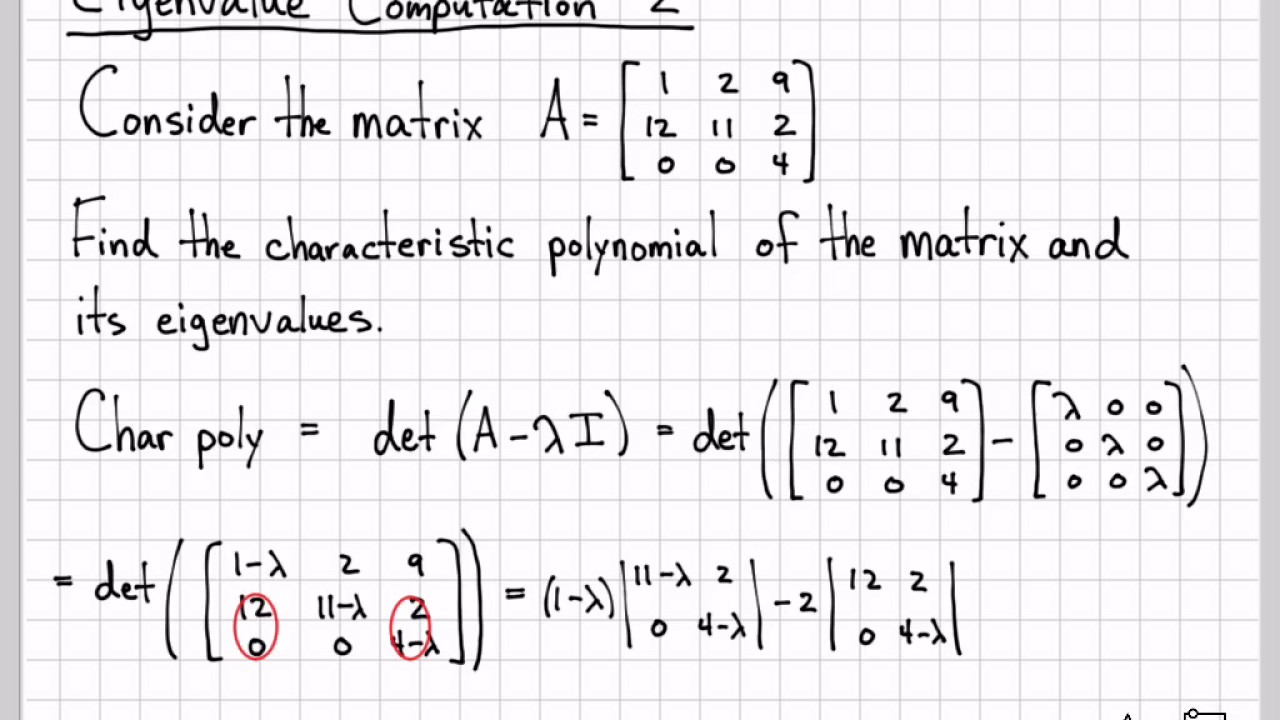

Question 2: How do you find eigenvalues and eigenvectors?

To find eigenvalues, solve the characteristic equation det(A - λI) = 0, where A is the matrix, I is the identity matrix, and λ is the eigenvalue. For each eigenvalue λ, solve the system of equations (A - λI)x = 0 for nonzero x to find the corresponding eigenvectors.
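As a worked illustration of this procedure, take the matrix below, which is chosen only because its eigenvalues are whole numbers:

$$A = \begin{pmatrix} 4 & 1 \\ 2 & 3 \end{pmatrix}, \qquad \det(A - \lambda I) = (4 - \lambda)(3 - \lambda) - 2 = \lambda^2 - 7\lambda + 10 = (\lambda - 5)(\lambda - 2)$$

The eigenvalues are therefore $\lambda = 5$ and $\lambda = 2$. For $\lambda = 5$, the system $(A - 5I)x = 0$ reduces to $-x_1 + x_2 = 0$, so $x = (1, 1)^T$ is an eigenvector. For $\lambda = 2$, the system $(A - 2I)x = 0$ reduces to $2x_1 + x_2 = 0$, so $x = (1, -2)^T$ is an eigenvector. Any nonzero multiple of either vector is also an eigenvector for the same eigenvalue.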

Question 3: What is the geometric interpretation of eigenvectors?

Eigenvectors represent the directions along which a linear transformation acts as pure scaling. When an n × n matrix has n linearly independent eigenvectors, they form a basis for the vector space, and the eigenvalues determine the amount of scaling along each basis direction.

Question 4: What are the applications of eigenvalues and eigenvectors?

Eigenvalues and eigenvectors have applications in various fields, including: solving systems of linear equations, matrix diagonalization, stability analysis, quantum mechanics, and image processing.

Question 5: How are eigenvalues and eigenvectors related to matrix diagonalization?

An n × n matrix $A$ can be diagonalized if and only if it has n linearly independent eigenvectors. In that case $A = PDP^{-1}$, where the diagonal matrix $D$ has the eigenvalues on its diagonal and the columns of $P$ are the corresponding eigenvectors, in the same order.
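The relationship between a matrix, its eigenvectors, and its diagonal form can be verified on a small case. The sketch below assumes a 2 × 2 example whose eigenvectors are already known; the matrices `A` and `P` and the helpers `matmul` and `inverse_2x2` are illustrative, not library functions.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def inverse_2x2(M):
    """Exact inverse of an invertible 2x2 matrix."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

A = [[4, 1], [2, 3]]
# Columns of P are eigenvectors of A: (1, 1) for eigenvalue 5, (1, -2) for eigenvalue 2.
P = [[1, 1], [1, -2]]

# Changing to the eigenvector basis turns A into a diagonal matrix.
D = matmul(matmul(inverse_2x2(P), A), P)

print(D)
```

The diagonal entries of `D` come out in the same order as the eigenvector columns of `P`, so swapping the columns of `P` swaps the entries of `D`.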

These FAQs provide a basic understanding of eigenvalues and eigenvectors. For more in-depth information, refer to textbooks or online resources on linear algebra.

Conclusion

Eigenvalues and eigenvectors are fundamental concepts in linear algebra with far-reaching applications across diverse fields. They provide insights into the behavior of linear transformations, enabling us to solve complex problems in areas such as quantum mechanics, control theory, and image processing.

The study of eigenvalue problems has deepened our understanding of linear transformations and their effect on vector spaces. Eigenvalues are the scaling factors, while eigenvectors are the directions along which vectors are scaled. This knowledge makes it possible to analyze and manipulate linear transformations effectively, leading to advancements in various scientific and engineering disciplines.
